Title page

Venue/Journal: N/A

Year: 2022

GitHub link: https://github.com/GewelsJI/DGNet

PDF link: Public: https://arxiv.org/pdf/2205.12853.pdf (Private: N/A)

Summary

- We propose to excavate texture information by learning the object-level gradient, rather than relying on boundary-supervised or uncertainty-aware modeling, for camouflaged object detection (COD).

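- Boundary-supervised and uncertainty-based models usually respond to the sparse edges of camouflaged objects, which introduces noise, especially in complex scenes.

- The edges of many camouflaged objects are undefined or blurry.

- Even under heavy camouflage, some clues are still left behind: we are interested in how the network mines these ‘discriminative patterns’ inside the object.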

- We propose a gradient-induced transition to automatically group features from the context and texture branches according to the soft grouping strategy.

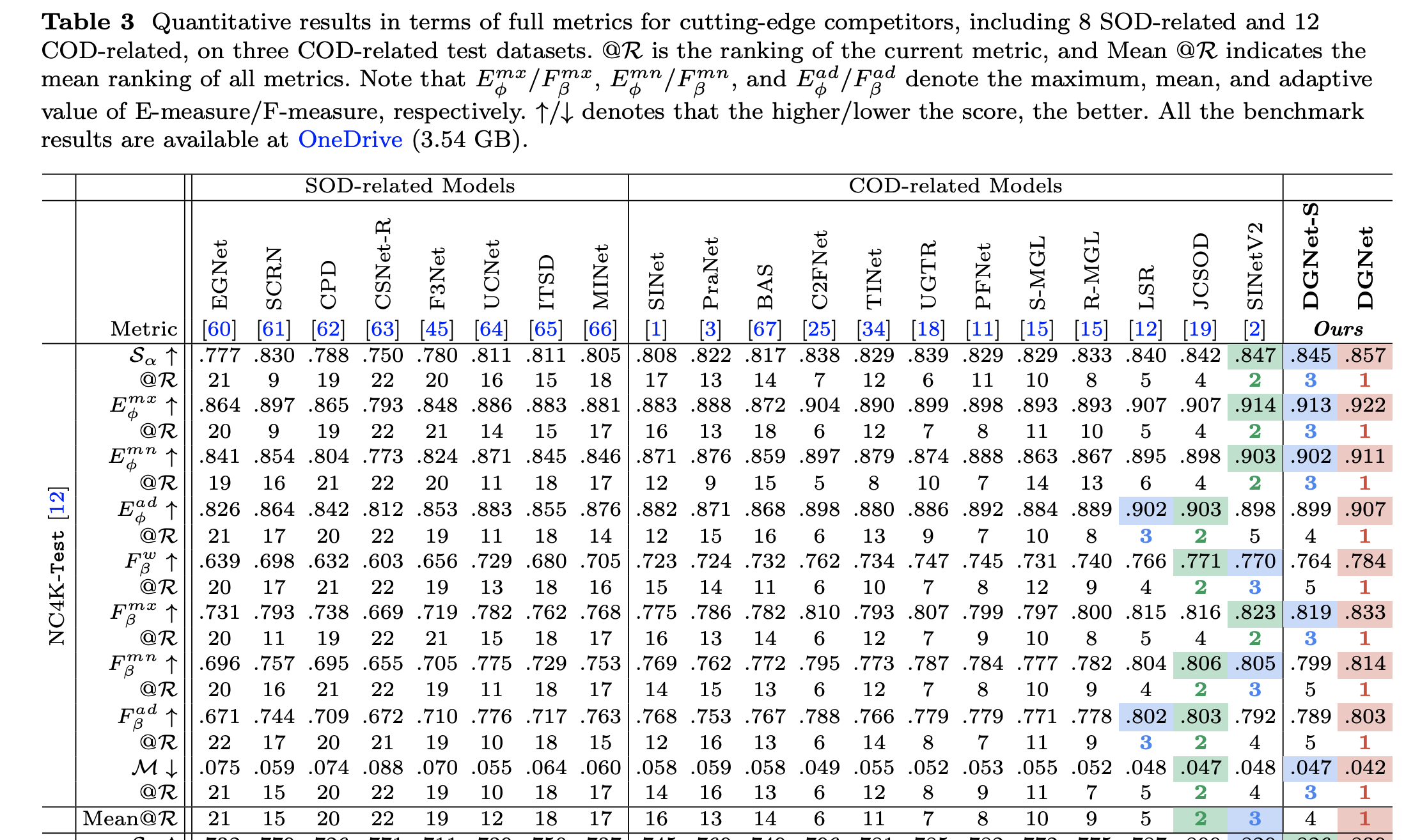

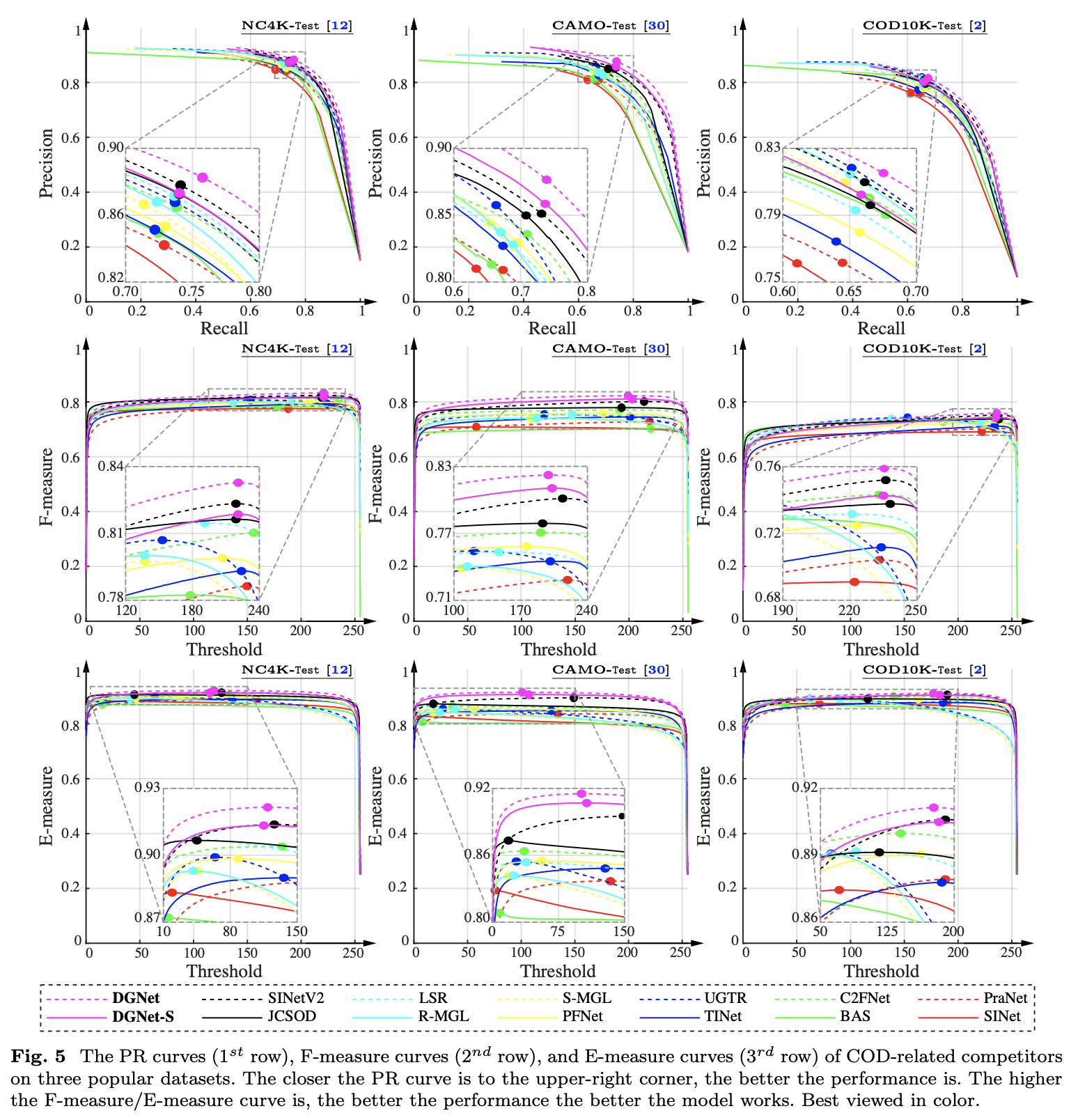

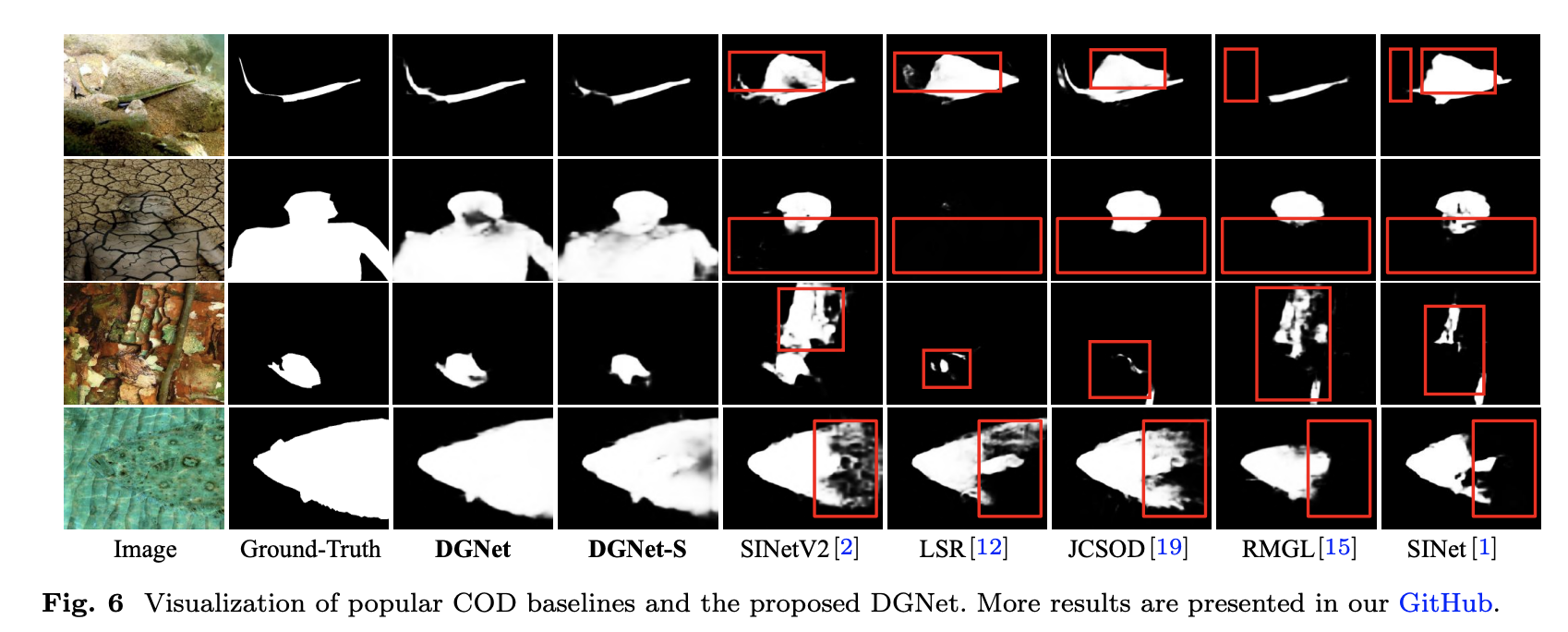

- DGNet outperforms existing state-of-the-art COD models by a large margin.

- DGNet-S runs in real time (80 fps) and achieves results comparable to the cutting-edge model JCSOD-CVPR21 with only 6.82% of its parameters.

- Application results also show that the proposed DGNet performs well in polyp segmentation, defect detection, and transparent object segmentation tasks.

Workflow

Methods

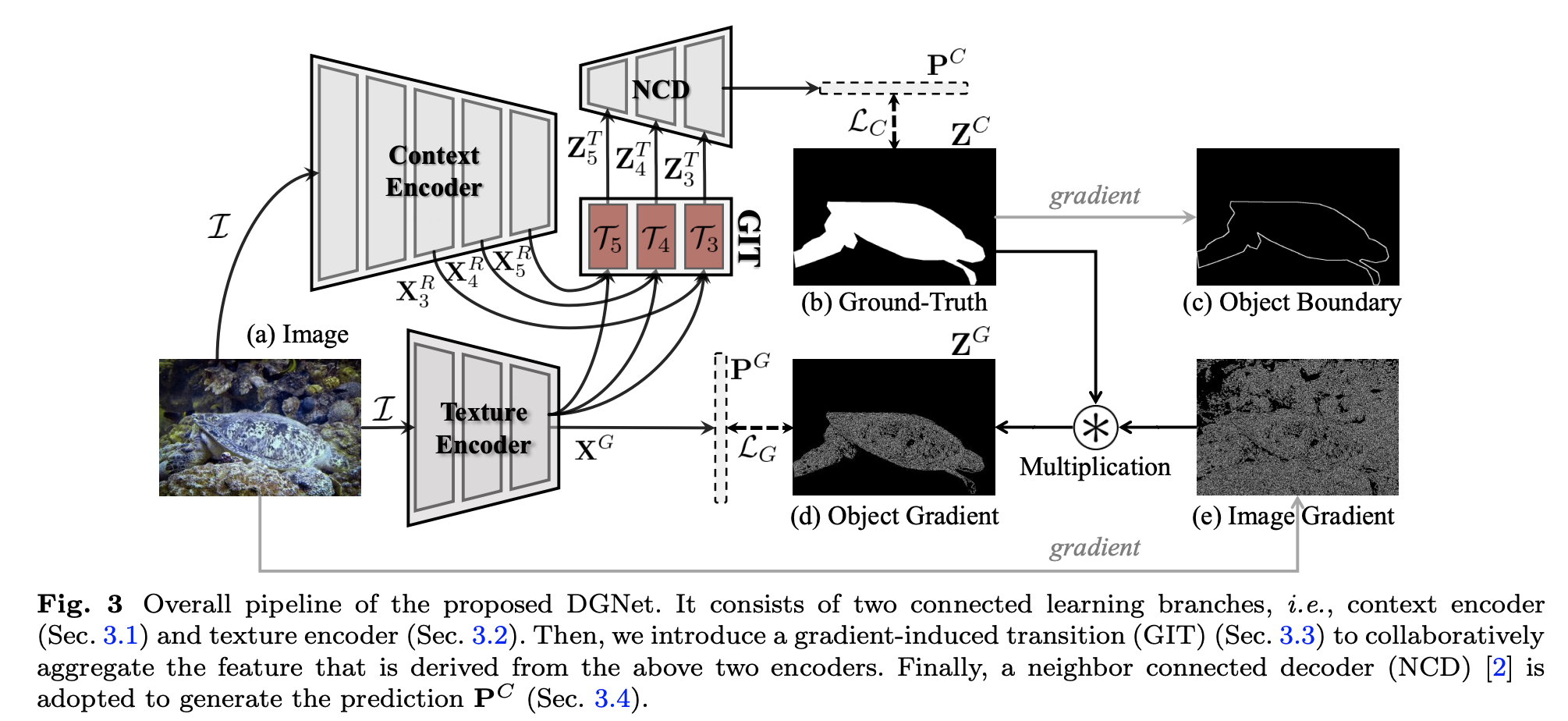

Overview

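- As discussed in Feature pyramid networks for object detection, low-level features are as important as high-level features.

- As suggested by Modnet: Real-time trimap-free portrait matting via objective decomposition, encoding low-level and high-level features together is not recommended.

- In summary, the authors propose two separate encoders for camouflaged object detection: a context encoder and a texture encoder.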

Context Encoder

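- Uses EfficientNet to extract a five-level feature pyramid.

- Only the last three levels, which carry affluent semantics, are kept.

- Two stacked ConvBR layers ($C_i × 3 × 3$) reduce the channel dimension, which lowers the computational burden.

- The final outputs are three context features $\{X^R_i\}_{i=3}^{5}$, with $X^R_i ∈ \mathbb{R}^{C_i×H_i×W_i}$.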

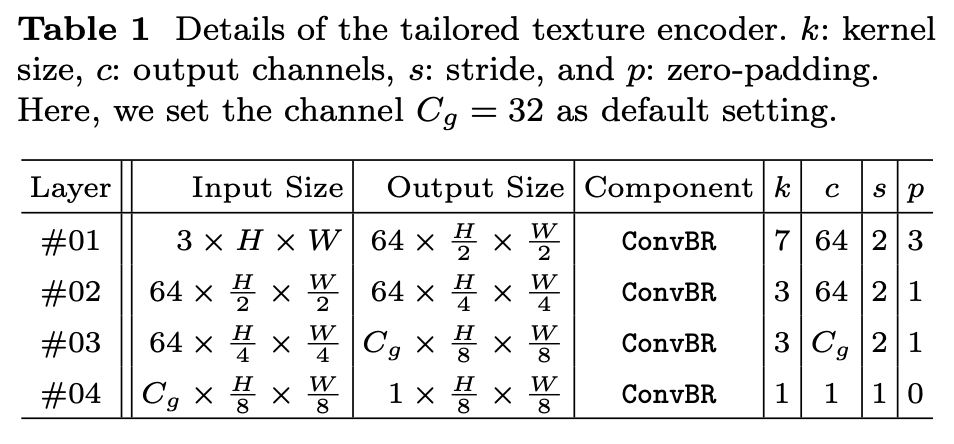

Texture Encoder

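The authors build a texture encoder branch supervised by an object-level gradient map. It compensates for the weak geometric texture cues in the last three feature levels of the context encoder.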

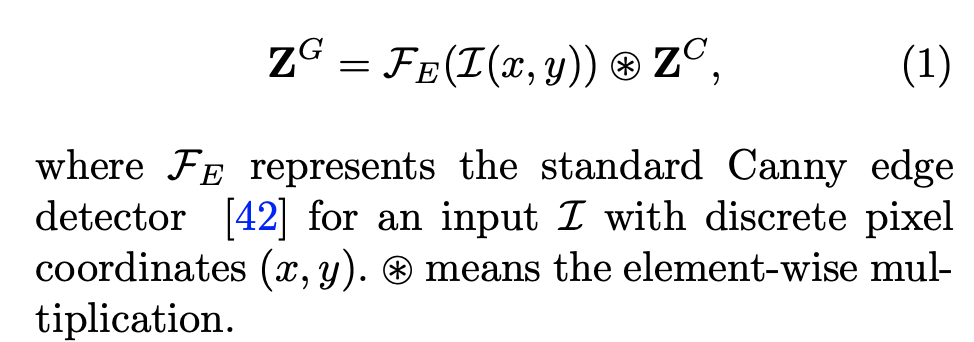

Object Gradient Generation

Image gradient: the directional change in an image’s intensity or color between adjacent positions.

Because the gradient of the whole image contains background noise, the authors construct $Z_G$, an object-level gradient map, to supervise the texture encoder.
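The idea of an object-level gradient map can be illustrated with a small NumPy sketch. It assumes that the map is the image gradient magnitude restricted to the object mask; the finite-difference operator (`np.gradient`) and the function name `object_gradient_map` are illustrative choices, not taken from the paper or its repository, which may use a different edge operator.

```python
import numpy as np

def object_gradient_map(image, mask):
    """Gradient magnitude of a grayscale image, kept only inside the object mask.

    Hypothetical helper: the edge operator and the masking step are assumptions.
    """
    image = np.asarray(image, dtype=np.float64)
    mask = np.asarray(mask, dtype=np.float64)
    # Finite-difference intensity change along rows (y) and columns (x).
    gy, gx = np.gradient(image)
    magnitude = np.hypot(gx, gy)
    # Element-wise masking suppresses gradients that belong to the background.
    return magnitude * mask

# Toy 4x4 image with a vertical step edge in every row.
image = np.array([[0, 0, 1, 1]] * 4, dtype=np.float64)
# The object mask covers only the top-left 2x2 block.
mask = np.zeros((4, 4))
mask[:2, :2] = 1
z_g = object_gradient_map(image, mask)
```

In this toy case the step edge exists in all four rows, but the masked map keeps a response only where the edge overlaps the object, which is the background-noise suppression the note describes.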

Texture encoder structure: Layer #04 is used for supervised training.

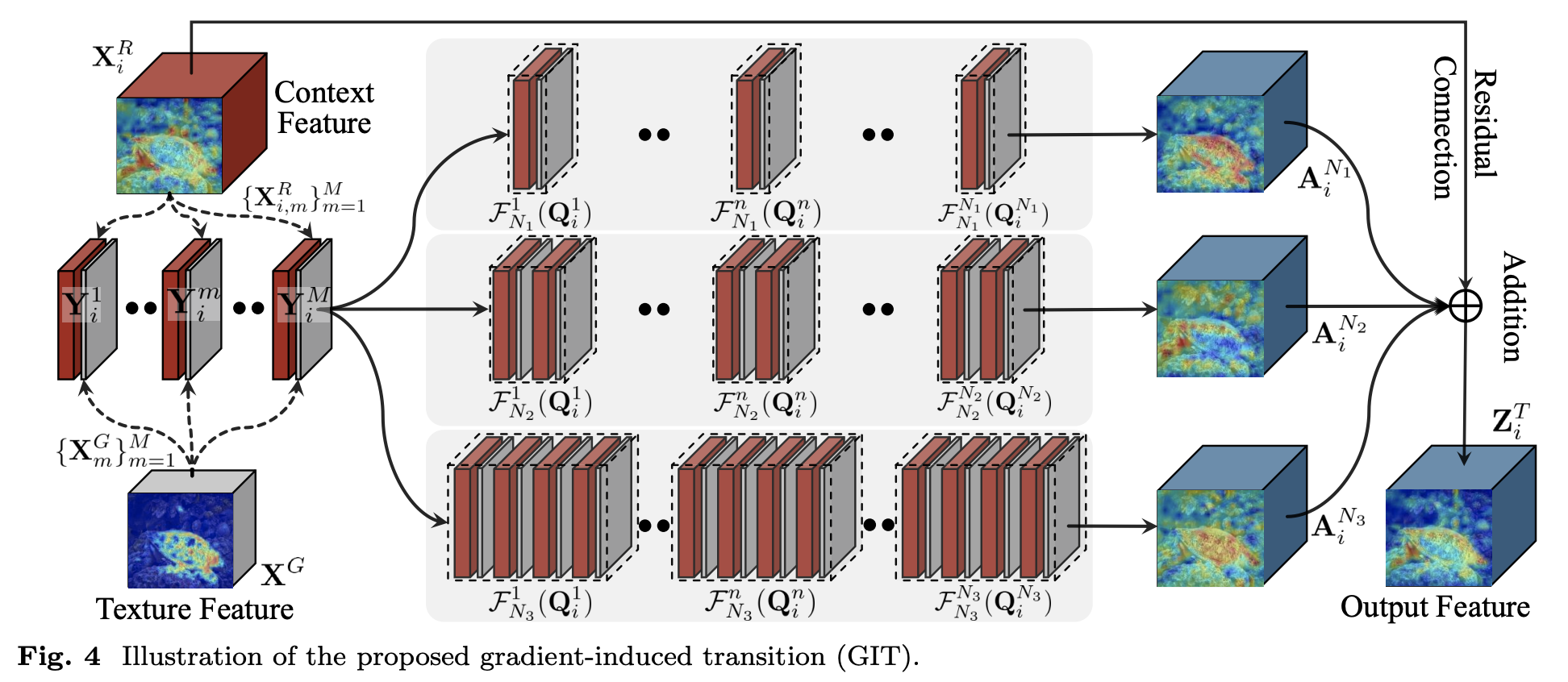

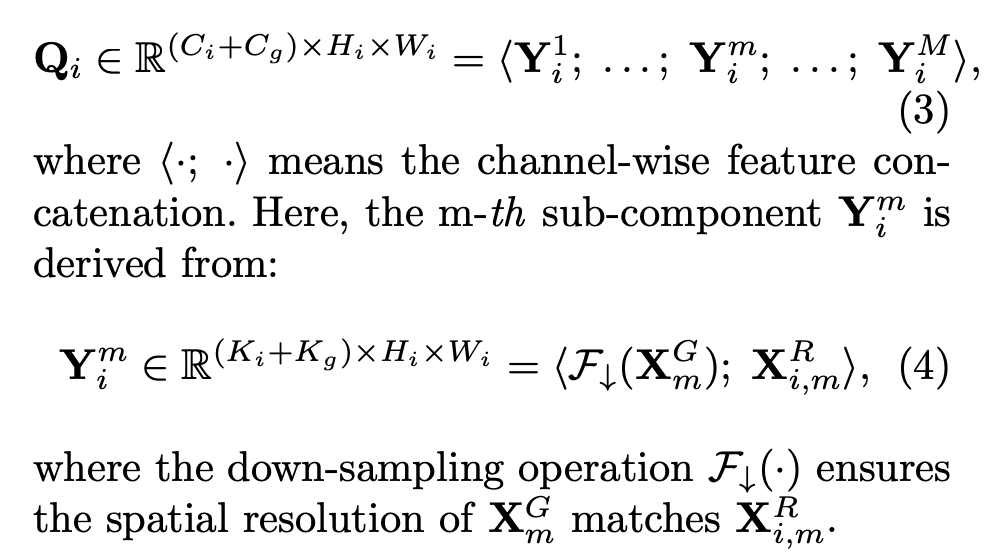

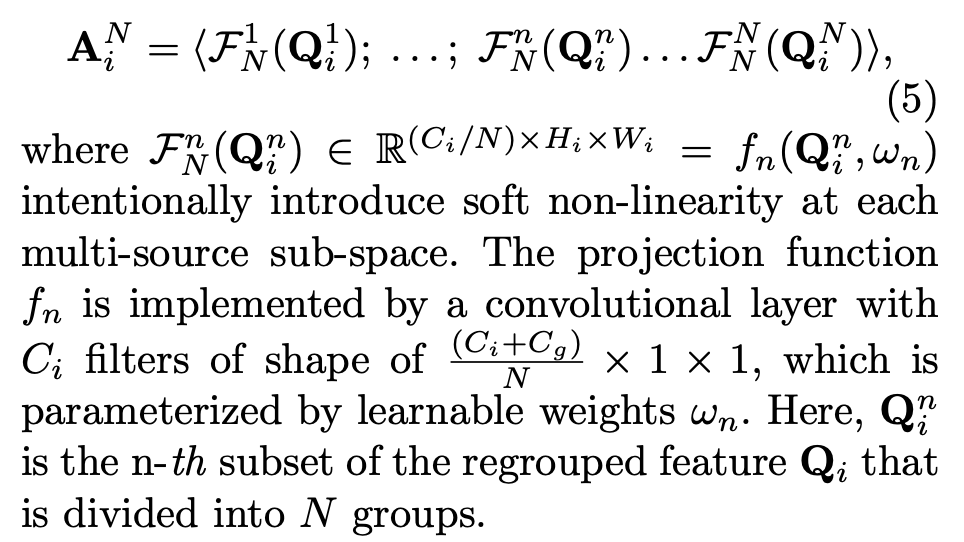

Gradient-Induced Transition

Features are split and regrouped along the channel dimension to obtain $Q_i$.
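A minimal sketch of channel-wise splitting and regrouping, assuming that the context and texture features share the same spatial size and are interleaved group by group. The function name `group_and_interleave` and the group count are hypothetical, and the exact ordering used by DGNet may differ.

```python
import numpy as np

def group_and_interleave(context, texture, num_groups):
    """Split two (C, H, W) feature maps into channel groups and interleave them.

    Hypothetical helper: both channel counts must be divisible by num_groups.
    """
    ctx_groups = np.split(context, num_groups, axis=0)
    tex_groups = np.split(texture, num_groups, axis=0)
    interleaved = []
    for ctx, tex in zip(ctx_groups, tex_groups):
        # Each context group is followed by its matching texture group.
        interleaved.extend([ctx, tex])
    return np.concatenate(interleaved, axis=0)

# Context features are zeros (4 channels), texture features are ones (2 channels).
context = np.zeros((4, 2, 2))
texture = np.ones((2, 2, 2))
q = group_and_interleave(context, texture, num_groups=2)
channel_pattern = q[:, 0, 0].tolist()
```

With two groups, the six output channels alternate between two context channels and one texture channel, so every group sees both kinds of features.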

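Soft-grouping strategy

To capture multi-scale information about the object, the design applies parallel nonlinear projections into fine-grained subspaces.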

Parallel Residual Learning

Introduces the idea of parallel residual learning.

Decoder

Neighbor connection decoder (NCD)

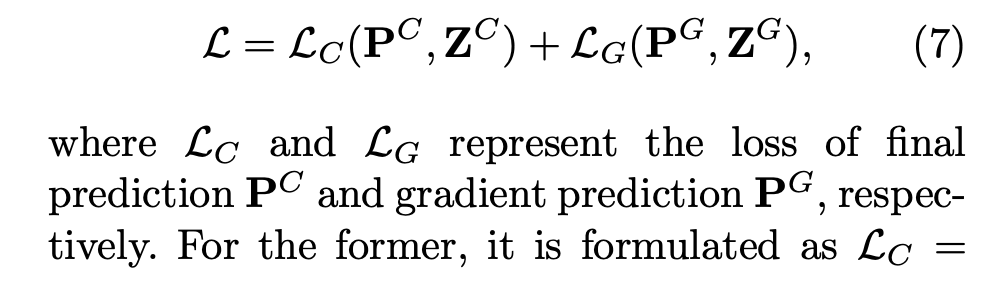

Loss function

Both $L_C$ and $L_G$ are weighted IoU + BCE.
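For reference, a weighted IoU + BCE term can be sketched in NumPy as below. This follows a commonly used formulation and is an assumption: it is not copied from the DGNet code. The function name `weighted_bce_iou`, the `weight` argument, and the `+ 1.0` smoothing constants are illustrative. Passing no weight map reduces it to plain BCE + IoU.

```python
import numpy as np

def weighted_bce_iou(logits, target, weight=None, eps=1e-7):
    """Weighted binary cross-entropy plus weighted soft-IoU loss (illustrative)."""
    logits = np.asarray(logits, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    if weight is None:
        # Uniform weights: every pixel contributes equally.
        weight = np.ones_like(target)
    prob = 1.0 / (1.0 + np.exp(-logits))
    # Clip probabilities so the logarithms stay finite.
    prob_c = np.clip(prob, eps, 1.0 - eps)
    bce = -(target * np.log(prob_c) + (1.0 - target) * np.log(1.0 - prob_c))
    w_bce = (weight * bce).sum() / weight.sum()
    # Soft intersection and union, both scaled by the pixel weights.
    inter = (weight * prob * target).sum()
    union = (weight * (prob + target)).sum() - inter
    w_iou = 1.0 - (inter + 1.0) / (union + 1.0)
    return w_bce + w_iou

target = np.array([[1.0, 0.0], [0.0, 1.0]])
good_logits = 10.0 * (2.0 * target - 1.0)   # confident and correct
bad_logits = -good_logits                   # confident and wrong
loss_good = weighted_bce_iou(good_logits, target)
loss_bad = weighted_bce_iou(bad_logits, target)
```

A confident correct prediction yields a loss near zero, while a confident wrong one is penalized by both terms.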

Training strategy

Adam optimizer + ImageNet pre-training

The cosine annealing part of the SGDR strategy (I. Loshchilov and F. Hutter, “SGDR: Stochastic gradient descent with warm restarts,” in ICLR, 2017) is used to adjust the learning rate, where the minimum/maximum learning rate and the maximum adjusted iteration are set to $10^{-5}$/$10^{-4}$ and 20, respectively.
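The schedule can be written out with the standard cosine annealing formula, using the minimum/maximum learning rate and maximum iteration quoted above. The function name `cosine_annealing_lr` is hypothetical, and clamping the step to the range `[0, t_max]` (that is, no warm restart) is an assumption of this sketch.

```python
import math

def cosine_annealing_lr(step, lr_min=1e-5, lr_max=1e-4, t_max=20):
    """Learning rate at a given step under cosine annealing without restarts."""
    # Assumption: steps outside [0, t_max] are clamped instead of restarting.
    step = min(max(step, 0), t_max)
    cosine = math.cos(math.pi * step / t_max)
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + cosine)

# Learning rate at every step from 0 to t_max.
schedule = [cosine_annealing_lr(t) for t in range(21)]
```

The rate starts at the maximum, passes the midpoint of the two bounds halfway through, and ends at the minimum.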

Results

Quantitative analysis

Qualitative comparison

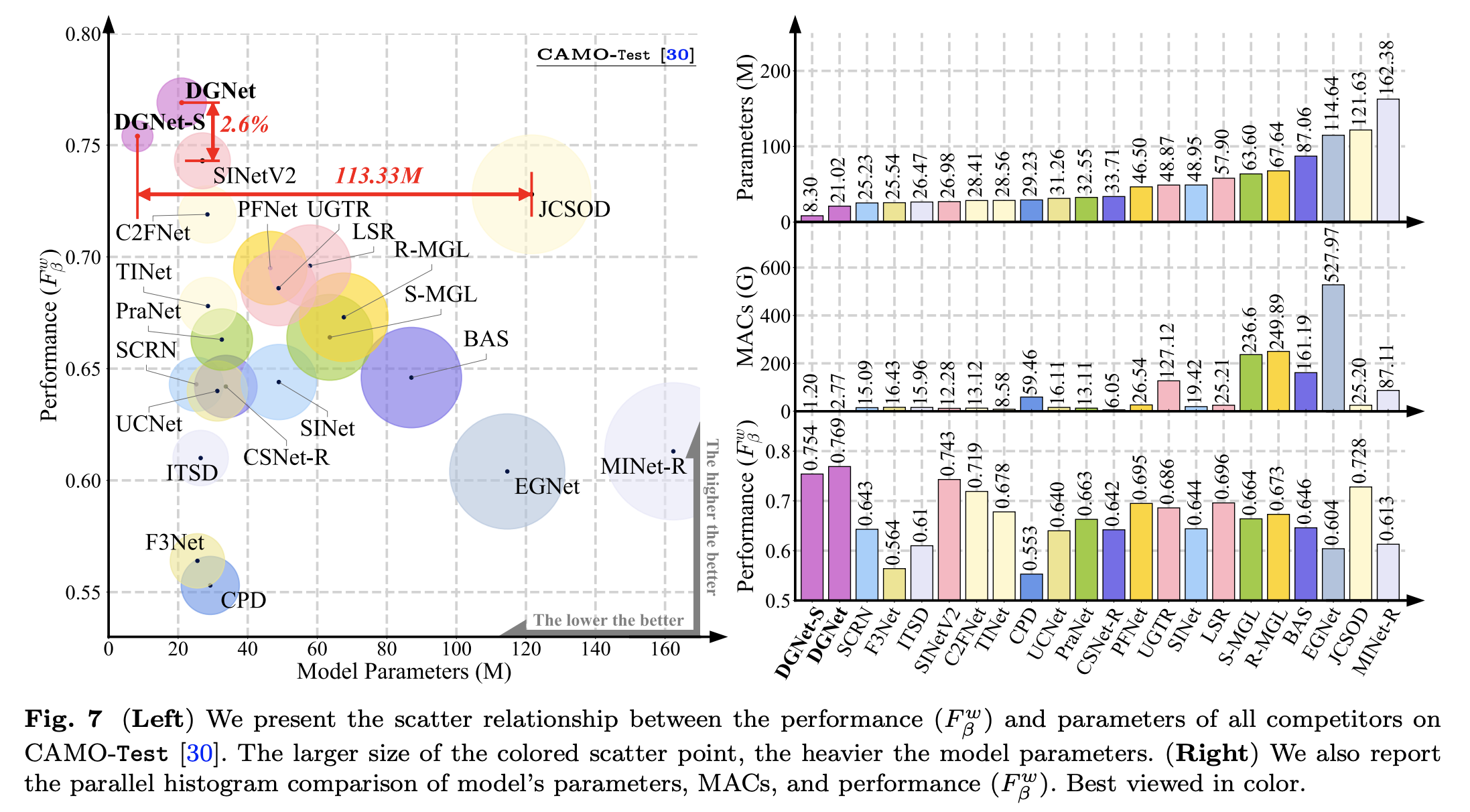

Efficiency Analysis

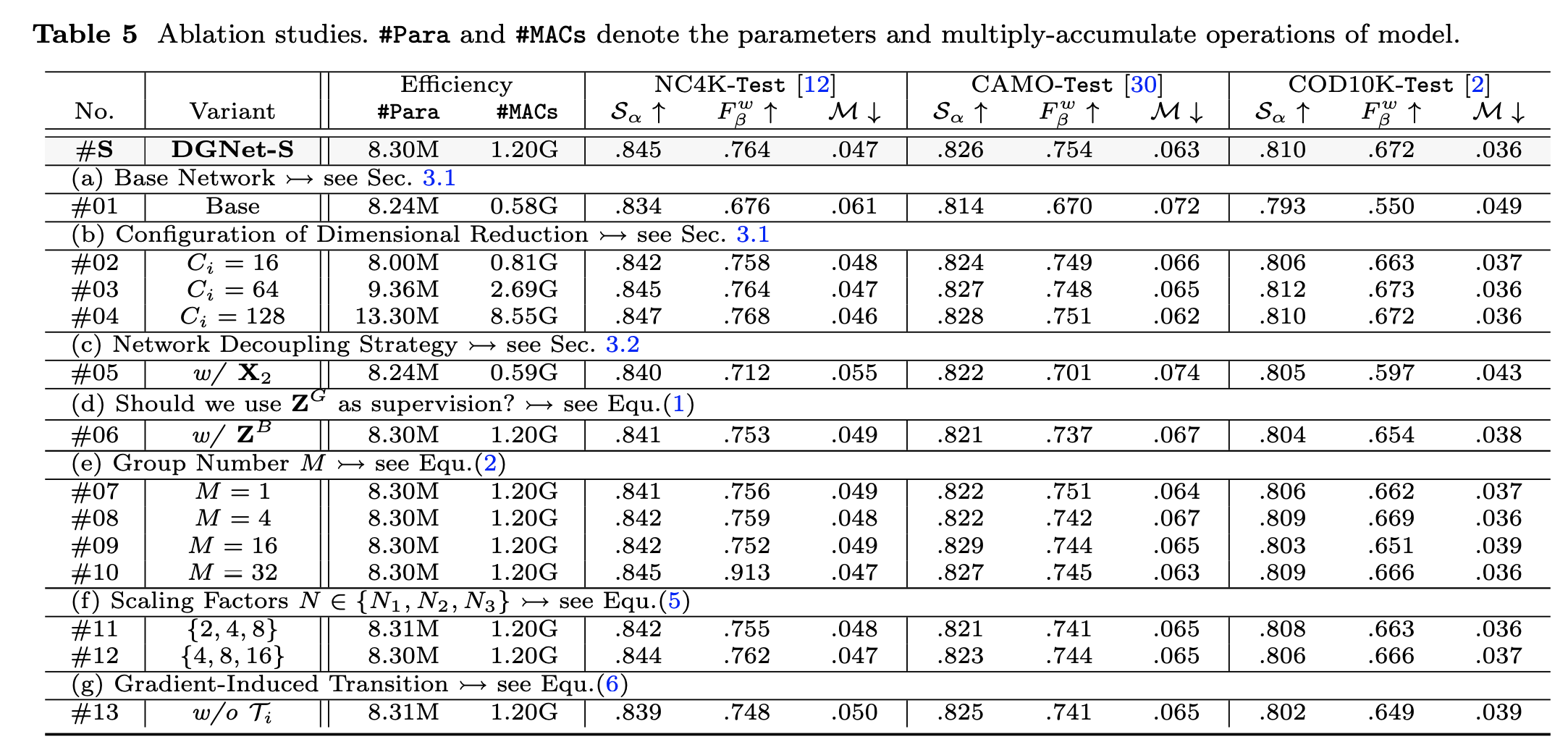

Ablation study

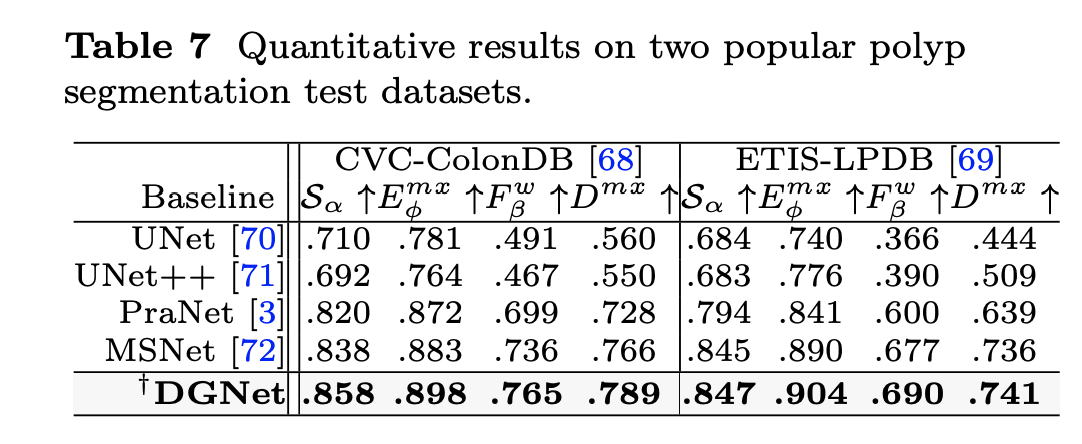

Downstream applications

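Insights and reflections

- Deep and shallow features are first processed separately, then re-integrated by group and by scale.

- Introducing a gradient-induced mask alongside the conventional mask, as a multi-task learning setup, effectively improves performance on the COD task.

- A good example of how to compare trade-offs and how to write them up.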

Core code
